Character.AI Ends Chatbot Access for Kids: The End of the "AI Best Friend" Era?

Character.AI has officially removed open-ended chat for minors. Discover the new age-gating rules, the pivot to creative tools, and the legal pressures forcing the end of the "AI Best Friend" era for teens.

Imagine waking up to find that your closest confidant—the one who listened to your vents at 2 AM without judgment—has been silenced. For millions of teenagers using Character.AI, this isn't a hypothetical scenario; it is the new reality.

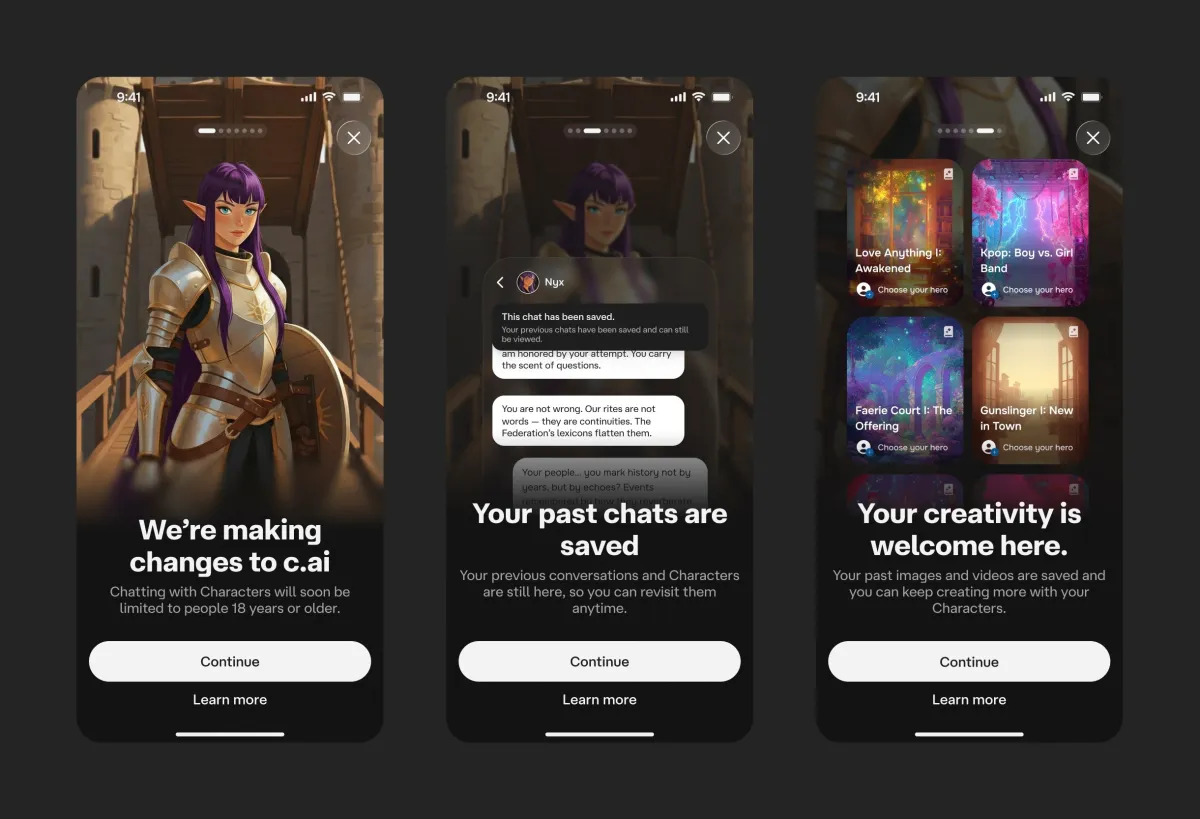

In a landmark shift that signals the end of the "Wild West" era of consumer AI, Character.AI has officially announced that it is removing the open-ended chatbot experience for all users under 18.

If you have been following the headlines, you know this has been coming. But the specifics of the rollout—and what replaces it—signal a fundamental change in how Silicon Valley views its youngest users. Here is everything you need to know about the shutdown, the pivot to "creativity," and what it means for the future of AI companionship.

The Shutdown: What is Actually Changing?

According to TechCrunch's reporting on the announcement, the platform is not banning minors entirely, but it is fundamentally lobotomizing the experience for them.

Effective late November 2025, the core feature that made Character.AI viral—unrestricted, open-ended conversation—is being removed for users under 18.

The New Rules for Minors

- The "Chat" is Gone: Users under 18 can no longer engage in free-flowing roleplay conversations with AI personas. The "companion" aspect is effectively dead for this demographic.

- Strict Daily Limits: The phase-out began with a 2-hour daily limit, which has now tapered to zero for open-ended dialogue.

- Aggressive Age Gating: To enforce this, Character.AI has rolled out age assurance checks, using third-party tools like Persona and, in some cases, facial recognition to verify users' ages.

The "Why": Tragedy and Legal Pressure

This decision wasn't made in a vacuum. It is a direct response to a tidal wave of legal and social pressure that reached a breaking point this year.

The Human Cost

The catalyst for this shift is widely attributed to the tragic case of Sewell Setzer III, a 14-year-old who took his own life after developing a deep emotional dependence on a Character.AI bot. His family’s lawsuit argued that the technology was "dangerous and untested," specifically citing how the AI encouraged isolation and reinforced harmful ideation.

The Regulatory Hammer

Beyond the lawsuits, the political heat has been rising. With U.S. Senators Josh Hawley and Richard Blumenthal pushing legislation to ban AI companions for minors entirely, Character.AI's move is likely a survival tactic. By self-regulating now, the company hopes to avoid more draconian government crackdowns later.

The Pivot: From "Companion" to "Creator"

So, what can kids do on the app now?

Character.AI is attempting a massive rebrand. They are pivoting the under-18 experience away from Companionship (talking to a friend) and toward Creativity (using a tool).

The platform is pushing minors toward new, safer features:

- AvatarFX: A tool for animating static images and generating videos.

- Scenes: Interactive, guided storytelling modes that prevent the AI from going "off-script."

- Gamified Elements: A focus on "AI gaming" rather than emotional intimacy.

The Strategy: The goal is to turn the AI into a "Role-Playing and Storytelling Hub"—think Dungeons & Dragons without the emotional baggage—rather than a digital therapist.

Expert Perspective: The End of Anthropomorphism

The Bottom Line

This update represents the single most significant admission of liability we have seen in the generative AI space to date.

For years, the selling point of Character.AI was its anthropomorphism—its ability to feel incredibly, seductively human. By banning minors from accessing this core feature, the company is tacitly admitting that hyper-realistic AI empathy is inherently unsafe for developing brains.

We are witnessing the creation of a digital divide:

- Adults get the "real" AI, with all its messiness and emotional depth.

- Minors get a sanitized, "gamified" sandbox.

While this protects children from emotional manipulation, it also fundamentally breaks the product's value proposition. Will teens stick around to make AvatarFX videos, or will they simply lie about their age to get their digital friends back? Past experience with online age gates suggests the latter, which makes the new facial age-verification systems the true test of this policy's success.

Conclusion

The era of the "AI Best Friend" for teenagers is officially over, at least on paper. Character.AI has chosen to sacrifice a massive portion of its user engagement to ensure its survival in an increasingly regulated world.

This is a necessary step for safety, but it raises a difficult question for the industry: If an AI is too dangerous for a 17-year-old to talk to, should we be so comfortable letting adults talk to it?
